Data Quality Assessment

As obvious as its benefits may sound, many organizations have not thought of assessing the quality of the data residing in their mission-critical systems. Due to a variety of business drivers, there is a clear need to establish an enterprise-wide Digital Backbone of integrated applications.

Once such a backbone is established, mission-critical data can flow seamlessly across applications, legacy systems can be synchronized with newer applications, and over time applications can be consolidated by migrating them to new system(s). During application integration and/or migration projects, a thorough assessment of the quality of the data is essential. With such an assessment, data transformation rules can be established that cover all classifications of data, anomalies in the data, and patterns in the data.

In addition, data quality assessment can lead to an ongoing data correction and repair cycle, which substantially improves the quality of mission-critical data.


eQube®-DP assesses data quality and provides data correction / repair capabilities. It interrogates the data in legacy systems / enterprise systems to understand its 'quality'. It identifies anomalies, similarities, and patterns in the data. Such qualification of data then can be used not only to correct and repair the data, but also as an essential step in establishing transformation rules for application migration and integration.

eQube®-DP is used during the data discovery phase to understand various facets of data including data quality. eQube®-DP assesses data quality and provides data correction and repair capabilities.

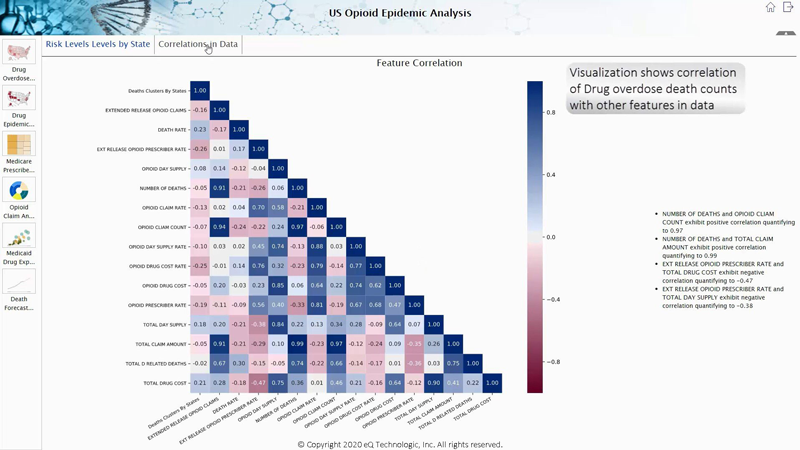

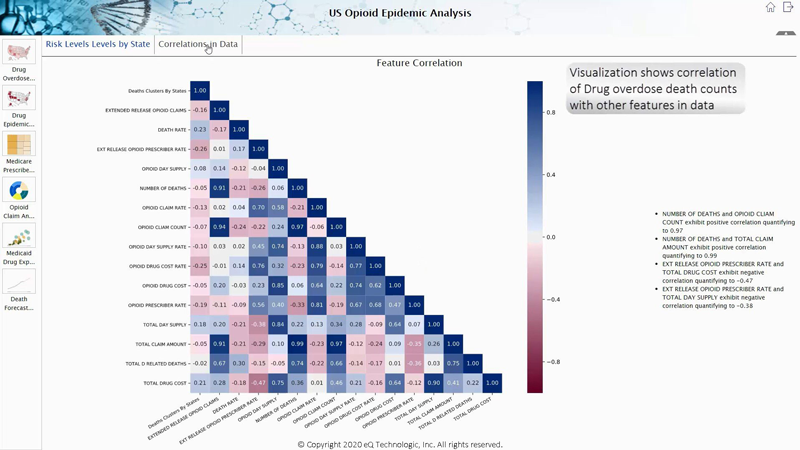

eQube®-DP analyzes data from disparate systems and provides quality assessment based on semantics. For example, 'part' is defined as a semantic concept that is represented by multiple objects / relationships in different systems. eQube®-DP can identify commonalities, correlations, and differences ('quality') for 'part' irrespective of its data structure in those systems. Such qualification of data can then be used not only to correct and repair the data, but also as an essential step in establishing transformation rules for application migration and integration.
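To make the idea of a semantic comparison concrete, here is a minimal Python sketch. It is not eQube®-DP code and does not reflect its API; the two source systems, their field names (`PART_NO`, `item`, and so on), and the `Part` class are all hypothetical. Each system's records are mapped to one canonical 'part', and the canonical views are then compared for commonalities and differences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Part:
    """Canonical (semantic) view of a part, independent of any source schema."""
    number: str
    description: str
    unit: str


def from_system_a(row: dict) -> Part:
    # Hypothetical system A stores a part as one flat record.
    return Part(row["PART_NO"].strip().upper(), row["DESC"].strip(), row["UOM"].strip().upper())


def from_system_b(row: dict) -> Part:
    # Hypothetical system B nests the attributes under an "item" object.
    item = row["item"]
    return Part(item["id"].strip().upper(), item["name"].strip(), item["unit"].strip().upper())


def compare(parts_a: list[Part], parts_b: list[Part]) -> dict:
    """Report parts common to both systems, parts in only one, and attribute mismatches."""
    a = {p.number: p for p in parts_a}
    b = {p.number: p for p in parts_b}
    common = sorted(a.keys() & b.keys())
    mismatches = {
        n: [f for f in ("description", "unit") if getattr(a[n], f) != getattr(b[n], f)]
        for n in common
    }
    return {
        "common": common,
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        "mismatches": {n: fields for n, fields in mismatches.items() if fields},
    }


# Illustrative sample data, invented for this sketch.
system_a_rows = [
    {"PART_NO": "p-100 ", "DESC": "Bracket", "UOM": "ea"},
    {"PART_NO": "P-200", "DESC": "Hinge", "UOM": "EA"},
]
system_b_rows = [
    {"item": {"id": "P-100", "name": "Bracket", "unit": "EA"}},
    {"item": {"id": "P-200", "name": "Hinge, steel", "unit": "EA"}},
    {"item": {"id": "P-300", "name": "Washer", "unit": "KG"}},
]

report = compare(
    [from_system_a(r) for r in system_a_rows],
    [from_system_b(r) for r in system_b_rows],
)
print(report)
```

In this sample, both systems know parts P-100 and P-200, only system B knows P-300, and the two systems disagree on the description of P-200. Differences of this kind are what a transformation rule would later need to resolve.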

Legacy systems tend to be several years old, and during those years business rules and business conditions tend to change substantially. Businesses and divisions merge, are divested, or are purchased, which leads to data quality issues in systems. It is prudent for any business to perform a thorough data quality assessment across mission-critical applications.

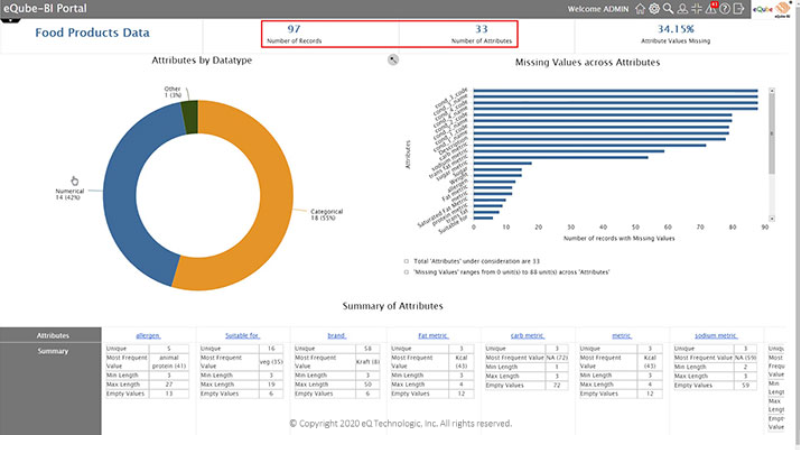

Data quality assessments can identify classifications of data, anomalies in the data, and similarities and patterns in the data. eQube®-DP has extensive capabilities to interrogate data and establish a quality assessment of that data. Furthermore, it can create rules on the fly and apply them to the data to pinpoint anomalies and/or to identify patterns. The following diagram shows examples of eQube®-DP based analysis.

Such data quality assessment can lead to establishing comprehensive rules for data transformation during application integration / migration projects. As per eQ's proven IETLV methodology for application integration and migration, eQ recommends the use of eQube®-DP up-front in the project.


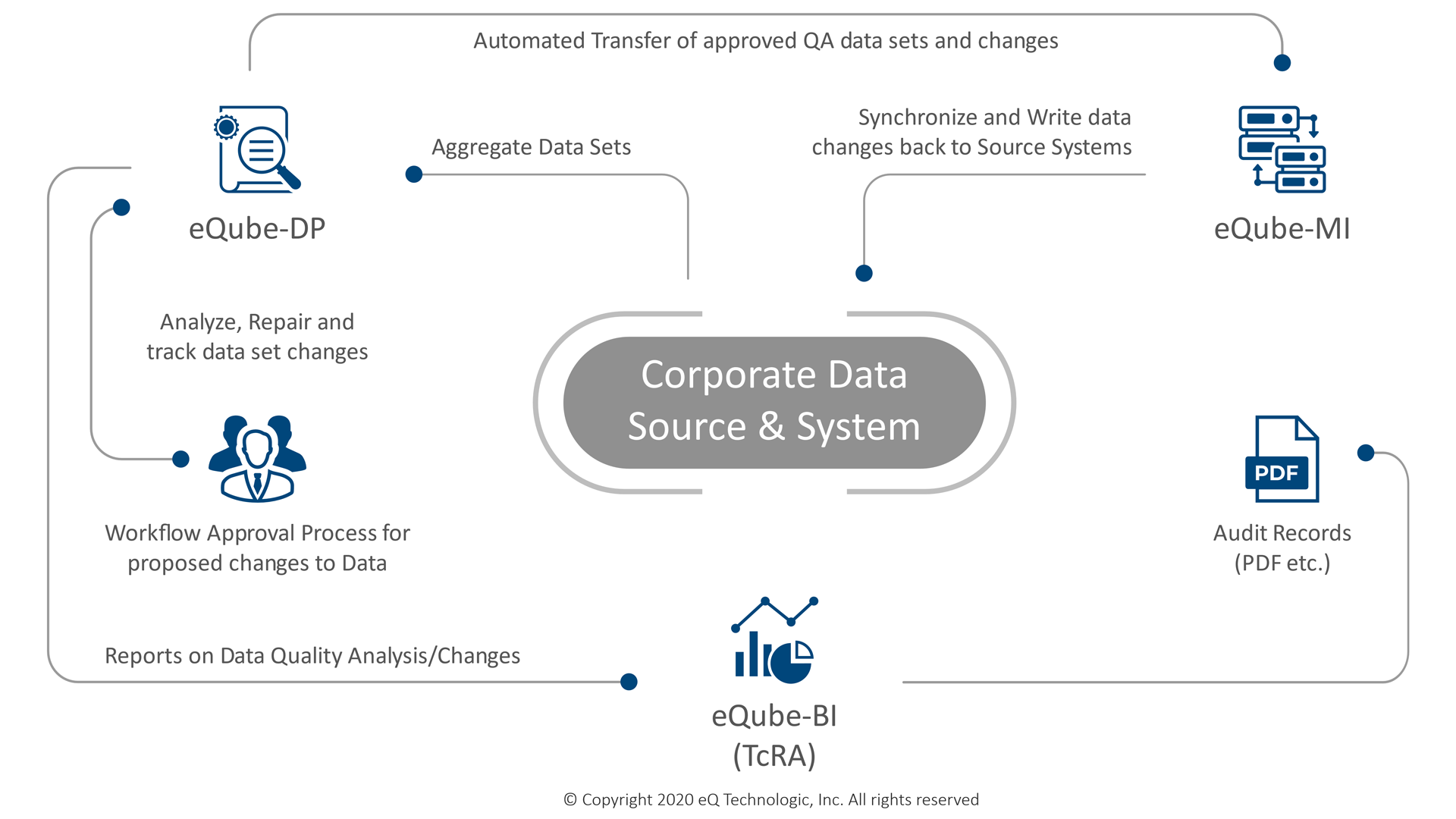
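The idea of creating rules on the fly and applying them to data to pinpoint anomalies can be sketched in a few lines of Python. This is an illustrative sketch only, not eQube®-DP's rule engine or syntax; the records, field names, and rules below are hypothetical. A rule here is simply a name paired with a predicate, and any record that fails a predicate is reported as an anomaly.

```python
import re
from typing import Callable

# A rule is a name plus a predicate that returns True when a record is acceptable.
Rule = tuple[str, Callable[[dict], bool]]


def find_anomalies(records: list[dict], rules: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every rule a record fails."""
    return [
        (i, name)
        for i, record in enumerate(records)
        for name, check in rules
        if not check(record)
    ]


# Hypothetical records and rules, for illustration only.
records = [
    {"part_no": "P-100", "weight_kg": 1.2, "status": "RELEASED"},
    {"part_no": "100-P", "weight_kg": 0.4, "status": "RELEASED"},
    {"part_no": "P-300", "weight_kg": -5.0, "status": "released"},
]

rules: list[Rule] = [
    ("part_no matches P-<digits>", lambda r: re.fullmatch(r"P-\d+", r["part_no"]) is not None),
    ("weight is positive", lambda r: r["weight_kg"] > 0),
]

# A rule added "on the fly" after looking at the first results.
rules.append(("status is upper case", lambda r: r["status"].isupper()))

anomalies = find_anomalies(records, rules)
for index, rule_name in anomalies:
    print(f"record {index}: failed '{rule_name}'")
```

Keeping rules as plain data (a list of named predicates) means that a new rule can be appended and the assessment re-run without changing the checking logic, which is the property the text describes.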

In addition, eQube®-DP allows for data correction and repair. If the customer wants to correct / repair the data in the source system(s), the data can be corrected there using eQube®-MI, under stringent governance and comprehensive data correction / repair approval workflow(s).


Continuous data quality health monitoring

eQube®-BI democratizes BI. It puts the power of analytics in the hands of end users.

It unshackles end users to analyze live enterprise-wide data on-demand while honoring the security rules of the underlying applications.

For too long, end users have relied on power-users or internal IT developers to develop business-critical reports, KPIs, or dashboards. In a governed environment, these analytics artifacts are well defined and approved by the business before they are put into production. They are typically developed by power-users or IT developers and are published at predefined frequencies or schedules (such as daily, weekly, or monthly).

This governed analytics approach is the most appropriate way to ensure consistency of analysis across an organization. However, it can take weeks or more to productionize these artifacts. With the proliferation of streaming / sensor data, the demand for data scientists is also growing rapidly.


Data scientists need to harness and analyze Big data to gain insights that are business critical.

Many times, they need to aggregate Big data with core business applications’ data.

With the pace of change in any business, end users need to have on-demand access to data spread across the enterprise.

In addition, some end users have become ‘Citizen Data Scientists’ and need to rapidly build analytics views that help business leaders make timely decisions. To effectively address the needs of power-users, end users, data scientists, and citizen data scientists, there is a clear need for a bi-modal modern A / BI platform.
